This article was originally published in the CERN Courier.

The High-Luminosity Large Hadron Collider (HL-LHC), due to begin operating around 2026, will require a computing capacity 50–100 times greater than currently exists. The large uncertainty in this number is mainly due to the difficulty of knowing how well the code used in high-energy physics (HEP) can benefit from new, hyper-parallel computing architectures as they become available. Up to now, code modernisation has been an area in which the HEP community has generally not fared too well.

We need to think differently to address the vast increase in computing requirements ahead. Before the Large Electron–Positron collider was launched in the 1980s, its computing challenges also seemed daunting; early predictions underestimated them by a factor of 100 or more. Fortunately, new consumer technology arrived and made scientific computing, hitherto dominated by expensive mainframes, suddenly more democratic and cheaper.

A similar story unfolded with the LHC, for which the predicted computing requirements were so large that IT planners offering their expert view were accused of sabotaging the project! This time, the technology that made it possible to meet these requirements was grid computing, conceived at the turn of the millennium and driven largely by the ingenuity of the HEP community.

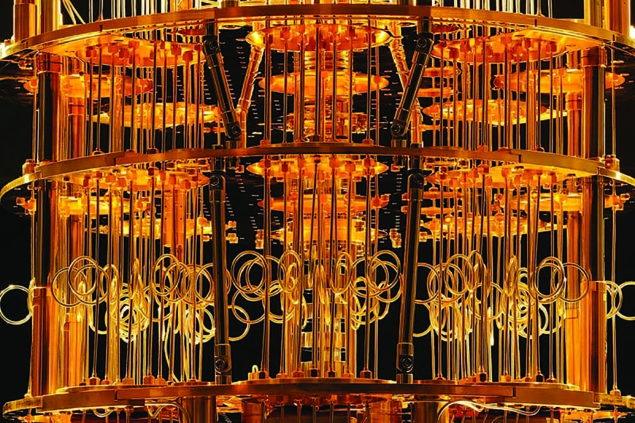

Looking forward to the HL-LHC era, we again need to make sure the community is ready to exploit further revolutions in computing. Quantum computing is certainly one such technology on the horizon. Thanks to the visionary ideas of Feynman and others, the concept of quantum computing was popularised in the early 1980s. Since then, theorists have explored its mind-blowing possibilities, while engineers have struggled to produce reliable hardware to turn these ideas into reality.

Qubits are the basic units of quantum computing: thanks to quantum entanglement, n qubits can represent 2ⁿ different states on which the same calculation can be performed simultaneously. A quantum computer with 79 entangled qubits has an Avogadro number of states (about 10²³); with 263 qubits, such a machine could represent as many concurrent states as there are protons in the universe; while an upgrade to 400 qubits could contain all the information encoded in the universe.
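The qubit counts quoted above follow from simple powers of two, and a short Python sketch makes the arithmetic easy to check. The function names `state_count` and `order_of_magnitude` are illustrative choices, not part of any quantum-computing library, and the sketch only counts basis states; it says nothing about how such states would be prepared or measured.

```python
import math


def state_count(n_qubits: int) -> int:
    """Number of basis states spanned by n_qubits qubits: 2 to the power n."""
    return 2 ** n_qubits


def order_of_magnitude(n_qubits: int) -> float:
    """Base-10 exponent of 2**n_qubits, i.e. n * log10(2)."""
    return n_qubits * math.log10(2)


# Qubit counts mentioned in the text (79, 263 and 400).
for n in (79, 263, 400):
    print(f"{n} qubits -> roughly 10^{order_of_magnitude(n):.1f} basis states")
```

For 79 qubits the exponent falls between 23 and 24, consistent with the Avogadro-number comparison; the 263- and 400-qubit cases can be compared in the same way against whichever estimates one prefers for the number of protons or the information content of the universe.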

However, the road to unlocking this potential – even partially – is long and arduous. Measuring the quantum states that result from a computation can prove difficult, offsetting some of the potential gains. Also, since classical logic operations tend to destroy the entangled state, quantum computers require special reversible gates. The hunt has been on for almost 30 years for algorithms that could outperform their classical counterparts. Some have been found, but it seems clear that there will be no universal quantum computer on which we will be able to compile our C++ code and then magically run it faster. Instead, we will have to recast our algorithms and computing models for this brave new quantum world.
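To illustrate what "reversible" means here, the following minimal Python sketch compares an ordinary classical AND with the Toffoli (controlled-controlled-NOT) gate, a standard textbook example of a reversible gate. It treats the gates purely as maps on classical bit strings, which is an assumption made for simplicity; it does not simulate superposition or entanglement.

```python
from itertools import product


def classical_and(a: int, b: int) -> int:
    """Ordinary AND: two input bits collapse into one output bit."""
    return a & b


def toffoli(a: int, b: int, c: int) -> tuple:
    """Toffoli gate on bits: flips the target c only when both controls are 1."""
    return a, b, c ^ (a & b)


# AND maps four possible inputs onto only two outputs, so the inputs
# cannot be recovered from the result: information is lost.
and_outputs = {classical_and(a, b) for a, b in product((0, 1), repeat=2)}

# Toffoli maps the eight 3-bit inputs onto eight distinct outputs ...
inputs3 = list(product((0, 1), repeat=3))
toffoli_outputs = [toffoli(*bits) for bits in inputs3]
is_bijective = len(set(toffoli_outputs)) == len(inputs3)

# ... and applying it twice returns every input unchanged.
is_self_inverse = all(toffoli(*toffoli(*bits)) == bits for bits in inputs3)

print("distinct AND outputs:", len(and_outputs))
print("Toffoli is bijective:", is_bijective)
print("Toffoli is its own inverse:", is_self_inverse)
```

With the target bit initialised to 0, the Toffoli gate writes the AND of its two controls into the target while keeping the controls intact, which is how a reversible circuit can reproduce an irreversible classical operation at the cost of extra bits.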

In terms of hardware, progress is steady but the prizes are still a long way off. The qubit entanglement in existing prototypes, even when cooled to the level of millikelvins, is easily lost and the qubit error rate is still painfully high. Nevertheless, a breakthrough in hardware could be achieved at any moment.

A few pioneers are already experimenting with HEP algorithms and simulations on quantum computers, with significant quantum-computing initiatives having been announced recently in both Europe and the US. In CERN openlab, we are now exploring these opportunities in collaboration with companies working in the quantum-computing field – kicking things off with a workshop at CERN in November (see below).

The HEP community has a proud tradition of being at the forefront of computing. It is therefore well placed to make significant contributions to the development of quantum computing – and stands to benefit greatly, if and when its enormous potential finally begins to be realised.

- A workshop on quantum computing will take place at CERN on 5–6 November, with technology updates from companies including NVIDIA, Intel, IBM, Strangeworks, D-Wave, Microsoft, Rigetti and Google: https://indico.cern.ch/e/QC18.